Warnings may help flag fake news, but they also backfire

Study tests how well disclaimers and media logos help

Unfortunately, news seldom comes with a clear warning about whether what you’re about to read is real news or just some hoax.

cbies/iStockphoto

More and more, bogus news stories on Facebook and other social media have painted politicians in a false light. Some recent stories have falsely claimed to have turned up bad behavior by political candidates or public officials. Others portray politicians as heroes for deeds they never did. Either way, fake news erodes trust in the news media. And tall tales can sway public opinion.

Identifying and flagging potentially false headlines with some type of warning has been suggested as a way to help people spot fake news. But that only works a little, says a new report. Some steps might even backfire.

“Labeling headlines is not going to be enough to address a problem of this seriousness,” says David Rand. He’s a psychologist at Yale University in New Haven, Conn. Rand worked on the new research with Gordon Pennycook. He’s also a psychologist at Yale.

Even if only a tiny percentage of people believe fake news, so many people are online that plenty will still fall for it. Just one click can spread a fake news story to thousands of people. Fake news “has real effects,” says Charles Seife. He’s a science journalist trained in mathematics. Seife teaches journalism at New York University in New York City and did not work on the new study.

Ideally, folks should stop writing and spreading lies online. That’s not likely to happen, however. People write fake news to manipulate others or rake in money. And it’s too easy to fall for the lies and spread them, thinking they’re the truth. So Rand and Pennycook tested how well different cues might help people tell real headlines from fake ones. That could help to stop the spread of these falsehoods. They described their results in a paper posted with the online research library SSRN on September 14.

To warn or not to warn

Rand and Pennycook looked at whether warnings help people detect made-up stories. They asked 5,271 people to rate the accuracy of 12 true and 12 false news headlines. All the fake headlines came from Snopes.com. That’s a fact-checking website. All the headlines were shown as if they were on Facebook. Each had a picture, headline, opening sentence and a source.

The team ran its experiment five times with different subgroups of the larger group. Some people were in control groups. Their responses would be the ones against which all of the others would be compared. The control groups saw the headlines in Facebook format, with no other cues.

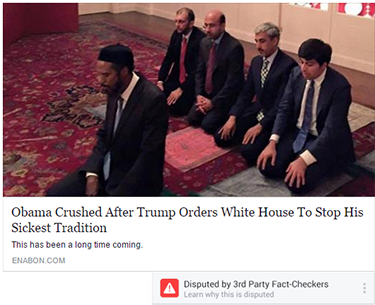

The other groups saw a set of headlines in which six of the fake stories carried a warning at the bottom. It showed an exclamation point inside a red box. Words next to that warning symbol said: “Disputed by 3rd Party Fact-Checkers.” The other six false headlines in the set had no warning. Neither did the 12 real headlines.

The results were discouraging. People in the control groups identified accurate news headlines only about 60 percent of the time. And they wrongly believed 18.5 percent of the fake headlines.

With warnings, the overall share of instances in which people wrongly believed false headlines went down. Now only 14.8 percent said fake headlines were true. “It had some effect, but that is not a big effect,” Rand notes.
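To see why Rand calls this a small effect, it can help to work the two reported figures through. The short Python sketch below uses only the 18.5 percent and 14.8 percent shares given above. The variable names are made up for illustration, and the calculation is a reader's back-of-the-envelope check, not an analysis from the researchers' paper.

```python
# Shares of fake headlines wrongly rated as true, as reported above.
believed_without_warning = 18.5  # percent, control groups
believed_with_warning = 14.8     # percent, headlines carrying a warning

# Absolute drop, measured in percentage points.
point_drop = believed_without_warning - believed_with_warning

# Relative drop: what fraction of the original false beliefs went away.
relative_drop = point_drop / believed_without_warning * 100

print(f"Drop: {point_drop:.1f} percentage points")
print(f"Relative drop: about {relative_drop:.0f} percent")
```

By this rough reckoning, the warning removed about one in five of the mistaken "true" ratings. The other four in five remained.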

The effect varied with whether people said they had preferred Republican Donald Trump or Democrat Hillary Clinton in the last U.S. presidential election. In particular, Trump supporters were substantially more likely than Clinton supporters to think fake news headlines without warnings were true. Trump supporters also were a bit less likely to heed a warning when it was shown.

Age, too, seemed to play some role. People ages 18 to 25 weren’t substantially better than older ones at identifying bogus stories from headlines with warnings. The younger people in the study also thought headlines without warnings were substantially more accurate than the control groups did. In other words, the younger group seemed more likely to see the lack of a warning as some kind of endorsement (that a story was likely true).

Says who?

Rand’s team also toyed with making the source of a news story more prominent. Why? Who published an article may offer a clue to its accuracy. Different news outlets have different reputations for accuracy. Most importantly, better news outlets generally require reporters to follow a code of professional ethics. And those outlets publish corrections of factual errors. Some news outlets, blogs or propaganda sources (such as trade groups or political pranksters) may publish something that might boost the reputation of candidates they favor or smear the reputation of their opponents.

As before, all study subjects in this second experiment saw headlines for 12 true and 12 false news stories. And all headlines were in the same Facebook format. But now the people in the treatment groups also saw the publishing outlet’s logo beneath the headline.

Alas, that tag seemed to have no real effect on how well people could tell fact from fiction, the researchers found.

This new report comes with caveats. For one thing, the report notes that the work wasn’t peer-reviewed. In that process, other researchers in a field carefully read and critique a study before its publication. Skipping this step can save some time in getting results out. But the lack of peer review means a study “hasn’t gone through that first set of sanity checks,” says Seife.

He also questions where the Yale team found its test subjects. Amazon Mechanical Turk is a website that pays people to do different tasks online. The researchers recruited participants from this site. Seife thinks the platform is “way overused” for research. “It’s a method of getting lots of participants cheaply,” he notes. “But you don’t know if they’re taking this survey seriously.”

People who know they’re in a study might also figure that they’ll see some fake headlines. That doesn’t necessarily happen in real life, Seife notes.

Despite those issues, “I do think [the new study] is significant,” Seife says. He also appreciates that it looked at the potential problems of labeling suspicious stories as being “suspect.” Indeed, he notes, “People might come to rely on the label and not spend their efforts determining themselves whether something is real or not.” Warnings could very well backfire.

As for pulling participants from Amazon Mechanical Turk, Rand notes that those people spend lots of time online. As he sees it, they may not be very different from folks who use Facebook a lot and rely on it for news. Also, he adds, Facebook has generally been unwilling to cooperate with researchers who want to use its site for studies. Both he and Seife hope Facebook might cooperate more in the future.

Stop and think

In any case, Seife says, people shouldn’t rely on warnings to tell them if something is true or not. “The task is just too big,” he says. There are far more fake stories out there than fact-checkers have time to check. “You at some point have to exercise your own judgment.”

Rand agrees that critical thinking is crucial. For an earlier project, he and Pennycook gave people a test called the Cognitive Reflection Test. It measures whether people are more likely to stop and think about problem questions or to go with their first gut response. For example, suppose a ball and a bat cost $1.10 in total. If the bat costs $1 more than the ball, how much does the ball cost? (Hint: Ten cents is the wrong answer.)
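For readers who want to check their answer, the puzzle can be worked out step by step. The Python sketch below is only an illustration. It is not part of the Cognitive Reflection Test or of the researchers' study, and the names in it (such as `fits`) are invented here. It works in cents so that the arithmetic stays exact.

```python
# Work in cents to avoid rounding trouble with decimals.
total_cents = 110        # ball + bat = $1.10
difference_cents = 100   # bat - ball = $1.00

# ball + (ball + difference) = total, so ball = (total - difference) / 2
ball_cents = (total_cents - difference_cents) // 2
bat_cents = ball_cents + difference_cents


def fits(ball, bat):
    """Return True only if both conditions from the puzzle hold."""
    return ball + bat == total_cents and bat - ball == difference_cents


print(ball_cents, bat_cents)           # the reasoned answer, in cents
print(fits(ball_cents, bat_cents))     # does the reasoned answer fit?
print(fits(10, 100))                   # does the gut answer of ten cents fit?
```

The gut answer fails the second condition. A 10-cent ball and a $1 bat do add up to $1.10, but then the bat costs only 90 cents more than the ball.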

People who did better on the test were better at spotting fake news, the team found. People who did worse on the test also were somewhat more likely to prefer Trump over Clinton. The researchers shared those results on SSRN on August 23.

“This is all correlation,” cautions Rand. And the existence of a correlation does not mean one thing causes another. Nonetheless, he notes, the Trump supporters in that study “were less likely to engage in analytical thinking and therefore less likely to be able to tell fake from real news.” Political party differences on the thinking test didn’t seem to show up before last year’s campaign, he adds. His study was not designed to test why such a change might have happened.

Ultimately, people who publish fake stories online aim to mislead people. If you don’t want to be duped, “stop and reflect on your first responses,” Rand says. “Cultivating that analytical thinking ability is critical to protecting yourself from manipulation.”