Explainer: Understanding the size of data

How big is big? A handy guide to understanding large quantities of information

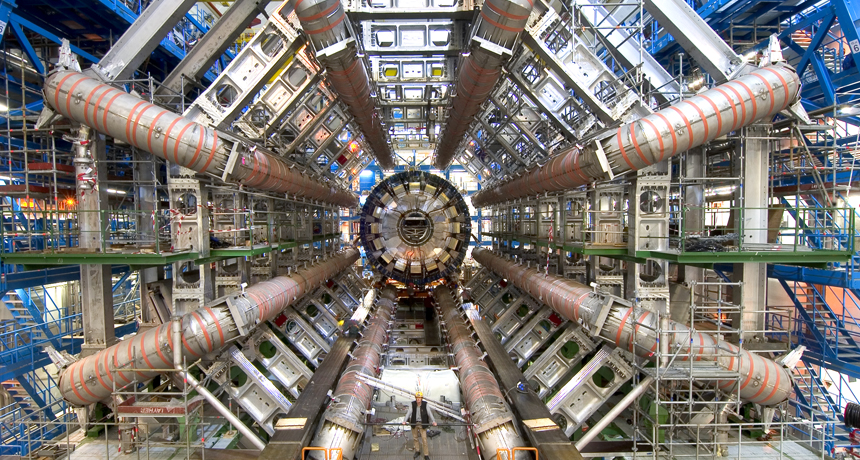

The Large Hadron Collider, an underground particle collider in Europe, can produce 42 terabytes of data a day.

Maximilien Brice, CERN

Share this:

- Share via email (Opens in new window) Email

- Click to share on Facebook (Opens in new window) Facebook

- Click to share on X (Opens in new window) X

- Click to share on Pinterest (Opens in new window) Pinterest

- Click to share on Reddit (Opens in new window) Reddit

- Share to Google Classroom (Opens in new window) Google Classroom

- Click to print (Opens in new window) Print

Scientific reports on big data seem to borrow terms from an alien language. Consider the large amount of data generated when experiments are running at the Large Hadron Collider. This enormous scientific instrument in Geneva, Switzerland, can generate 42 terabytes of data a day. The National Climatic Data Center in Asheville, N.C., stores more than 6 petabytes of climate data from ships, buoys, weather balloons, radars, satellites and computer models. (By 2020, the center expects to have 20 petabytes.) Experts estimate — it would be impossible to pin down an exact number — that we’d need at least 1,200 exabytes to store all the world’s existing data

It is difficult to quickly understand what these numbers mean. Even experts admit these sizes are staggering. “There is no good way to think about what this size of data means,” write Viktor Mayer-Schönberger and Kenneth Cukier in their 2013 book, Big Data. Mayer-Schönberger is an expert on information law at Oxford University in England. Cukier is a journalist who writes about data for magazines.

To get a grip on these numbers, start small. A byte is a typical unit of computer memory. Storing one letter of the alphabet or number usually takes one or two bytes of memory. To go larger, remember your powers of 2. A kilobyte, or 1,024 bytes, contains 2 to the 10th power — or 2 x 2 x 2 x 2 x 2 x 2 x 2 x 2 x 2 x 2 (abbreviated as 210). A megabyte contains 220 bytes, or slightly more than one million bytes. That’s enough memory to store a short novel. An mp3 download of an average pop song occupies about 4 megabytes on a digital device. A large photograph may need 5 megabytes, or the same amount of storage as the entire works of William Shakespeare.

Next in size is the gigabyte. It’s 230 — or 1,073,741,824 bytes. That’s enough memory to hold a 90-minute movie, about 250 songs or all the words contained in a shelf of books just over 18 meters (60 feet) long. Many smartphones come with 16 gigabytes of memory or more. Next in size is a terabyte, or 240 bytes. In 2000, scientists estimated that the entire printed contents of the U.S. Library of Congress would take up 10 terabytes of memory. And a petabyte (250 bytes) of memory could hold roughly one copy of all of the printed information in the world. Even larger still: exabytes (250 bytes), zettabytes (260) and yottabytes (270).

If printed in book form, all the data stored in the world — all 1,200 exabytes — would completely cover the planet in a layer 52 books deep.

Now picture how information was stored more than 2,000 years ago. Back then, the ancient Greeks built a sprawling library in Alexandria, Egypt. It probably resembled a modern university. It would have had places to walk, talk, think and read. The library’s goal was impressive: Collect in one place all of the written works in existence. By some estimates, the library at Alexandria housed, at one time, hundreds of thousands of scrolls gathered and copied from throughout the parts of the world known to the Greeks.

By today’s standards, however, that great library was tiny. If all the stored data now in existence were divvied up equally, every single person alive today would receive more than 300 times as much information as had been housed in the library of Alexandria.