Talking through a tube can trick AI into mistaking one voice for another

Current voice-identification systems don’t protect against this type of hack

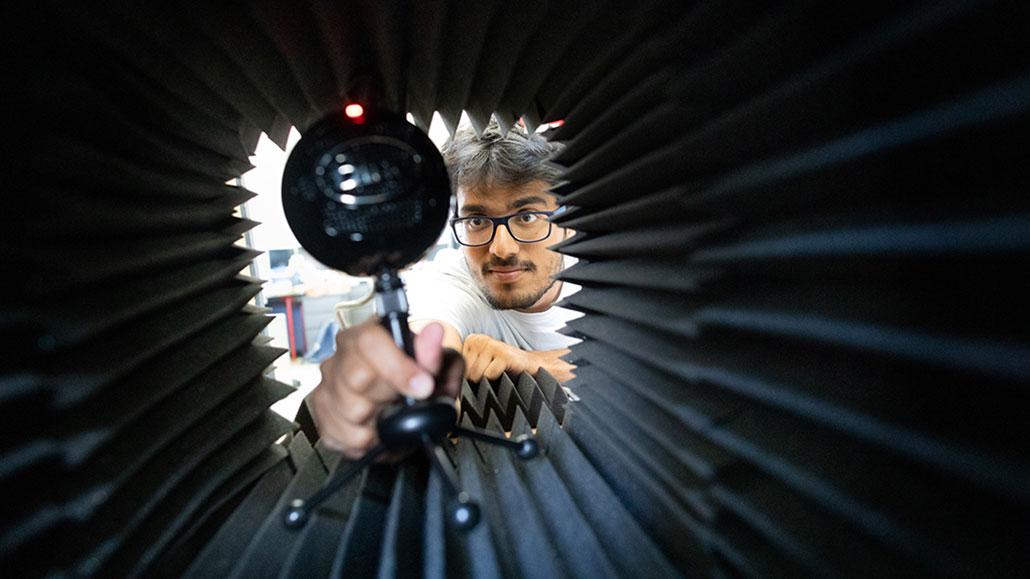

When people spoke through a simple tube with certain dimensions, a voice-recognition AI model wrongly identified them as celebrities. Here, team member Yash Wani, an engineering PhD student at the University of Wisconsin–Madison, sets up a microphone to capture sound during a test of the system.

Todd Brown/University of Wisconsin–Madison


Grab a paper-towel tube and talk through it. Sounds weird, huh? Your wacky tube voice probably wouldn’t fool your family or friends into thinking you were someone else. But it could trick a computer, new research finds.

In an experiment, people used specially designed tubes to make their voices sound like someone else’s. Those fake voices could fool an artificial intelligence, or AI, model built to identify voices. Since voice-recognition AI is used to guard many bank accounts and smartphones, criminals could craft tubes to break into those accounts and devices, says Kassem Fawaz. He’s an engineer at the University of Wisconsin–Madison.

He was part of a team that presented its findings in August at the USENIX Security Symposium in Anaheim, Calif.

“This was really creative,” David Wagner says of the new study. He was not involved in this new research. But he is a security expert at the University of California, Berkeley. “This is another step,” he says, “in the cat-and-mouse game of [cybersecurity] defenders trying to recognize people and attackers trying to fool the system.”

Mystique

Shimaa Ahmed wondered if a simple device could alter someone’s voice. First, she tried putting her hands over her mouth. Then she grabbed a paper-towel tube. To her surprise, “It actually worked to fool the [artificial-intelligence] model.” Over the next 18 months, her team developed Mystique, a plastic version of the tube, seen here.

Tricky tubes

Bad guys have already found ways to hack voice-ID systems. Typically they do this to steal from bank accounts. Most often, they use what’s known as deepfake software. It uses AI to create new speech that mimics the owner of the targeted bank account.

In response, many voice-ID systems have added protections. They check whether there’s any digital trickery behind a voice. But Fawaz’s team realized that these systems weren’t checking for non-digital tricks — such as tubes.

Tubes can alter someone’s voice by tampering with its sound waves. The sound of a voice contains waves of many different frequencies, and each frequency is heard as a different pitch. As sound waves travel through a tube, the air inside it vibrates, or resonates. That resonance makes some pitches louder and others softer. Which pitches get boosted or softened depends on the length and width of the tube.
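To make the role of length and width concrete, here is a minimal Python sketch. It assumes an idealized straight cylinder open at both ends, whose resonances fall at whole-number multiples of c / (2 × effective length), and it uses the common textbook "end correction" of about 0.61 times the radius for each open end. The function name `resonant_frequencies`, the constants, and the example dimensions are assumptions for illustration only. This is not the researchers' model, and a real tube with a mouth pressed to one end behaves differently.

```python
# Idealized model (an assumption): a straight cylinder open at both ends.
# Real tubes, especially with a mouth at one end, behave differently.

SPEED_OF_SOUND = 343.0  # meters per second, in air at room temperature
END_CORRECTION = 0.61   # extra length per open end, as a fraction of the radius


def resonant_frequencies(length_m: float, radius_m: float, count: int = 3) -> list[float]:
    """Return the first `count` resonant frequencies (hertz) of an idealized open-open tube."""
    # Sound behaves as if the tube were a bit longer than it really is;
    # that extra bit grows with the radius, so width matters too.
    effective_length = length_m + 2 * END_CORRECTION * radius_m
    # The lowest resonance; the others are whole-number multiples of it.
    fundamental = SPEED_OF_SOUND / (2 * effective_length)
    return [n * fundamental for n in range(1, count + 1)]


if __name__ == "__main__":
    # Rough, assumed dimensions for a paper-towel tube: 28 cm long, 2 cm radius.
    print(resonant_frequencies(0.28, 0.02))
```

With those assumed dimensions, the lowest resonance lands near 560 hertz. Cutting the tube to half its length pushes the resonant pitches up to nearly twice as high, while making it wider nudges them slightly lower.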

So Fawaz’s team came up with a math equation. It told them what tube dimensions would alter one person’s voice to sound like another’s, at least according to an AI model. The two starting voices couldn’t be too wildly different, though. For example, a person with a male-sounding voice usually couldn’t use a tube to impersonate someone with a female-sounding voice, and vice versa.
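The team's actual equation isn't given here, so the sketch below is only a toy version of the general idea of working backward from a desired sound to tube dimensions. It assumes an idealized straight tube open at both ends, a speed of sound of 343 meters per second, and an end correction of about 0.61 times the radius per open end. Under those assumptions, it solves for the length that would put the tube's lowest resonance at a chosen pitch. The helper name `tube_length_for` and the example numbers are hypothetical.

```python
# Toy inverse problem (an assumption, not the researchers' equation):
# pick a tube length so an idealized open-open tube resonates at a chosen pitch.

SPEED_OF_SOUND = 343.0  # meters per second, in air at room temperature
END_CORRECTION = 0.61   # extra length per open end, as a fraction of the radius


def tube_length_for(target_hz: float, radius_m: float) -> float:
    """Hypothetical helper: tube length (meters) whose lowest resonance is target_hz."""
    if target_hz <= 0:
        raise ValueError("target pitch must be positive")
    # Invert f = c / (2 * effective_length) to get the effective length...
    effective_length = SPEED_OF_SOUND / (2 * target_hz)
    # ...then subtract the end corrections to get the physical length.
    length = effective_length - 2 * END_CORRECTION * radius_m
    if length <= 0:
        raise ValueError("target pitch is too high for a tube this wide")
    return length


if __name__ == "__main__":
    # Hypothetical goal: a 600-hertz resonance from a tube 2 cm in radius.
    print(tube_length_for(600.0, 0.02))
```

Asking for a 600-hertz resonance from a tube 2 cm in radius gives a length of about 26 cm. A real design would need to reshape many pitches at once and would be judged by whether an AI model is fooled; this sketch does neither.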

The team didn’t try to hack anyone’s account. Instead, they tested out their tube trickery on AI models trained to recognize celebrity voices. Fourteen volunteers tried this out using a set of three 3D-printed tubes.

“Each participant who tried our system could impersonate some of the celebrities in the dataset,” says Shimaa Ahmed. She’s a PhD student at UW–Madison who works with Fawaz.

One person’s tube voice mimicked singer Katy Perry. Another volunteer got to impersonate Bollywood star Akshay Kumar. The tube voices fooled the AI models “60 percent of the time on average,” Ahmed says.

Listen: Same or different?

Which of these pairs of voices are the same speaker? Which pair is two different people? A tube changes someone’s voice, but the changes don’t easily trick people.


A different way of learning

People, however, weren’t so easily duped.

The researchers presented a separate group of volunteers with tube-altered voices paired with the celebrity voices they were meant to mimic. The volunteers thought the two voices belonged to the same person a mere 16 percent of the time.

The reason AI was easier to fool is that it doesn’t learn to recognize voices the same way we do. To learn to recognize a voice, AI models must study training data, such as a set of celebrity voice recordings. And they can only learn what’s in their training data. People don’t often talk through tubes, so tube-altered speech is missing from that training data.

Now that this clever tube hack has been revealed, other engineers can start testing it on the voice-ID systems that many people use with their banks and personal devices. Their goal is to learn ways to prevent such tube attacks, explains Wagner.

Meanwhile, Fawaz and his team are designing more elaborate devices involving multiple tubes or twisted shapes. These could make it possible to transform voices in more extreme ways. Could it be possible for anyone to mimic anyone else using one of these? Stay tubed, er, tuned.