Think twice before using ChatGPT for help with homework

This new AI tool talks a lot like a person — but still makes mistakes

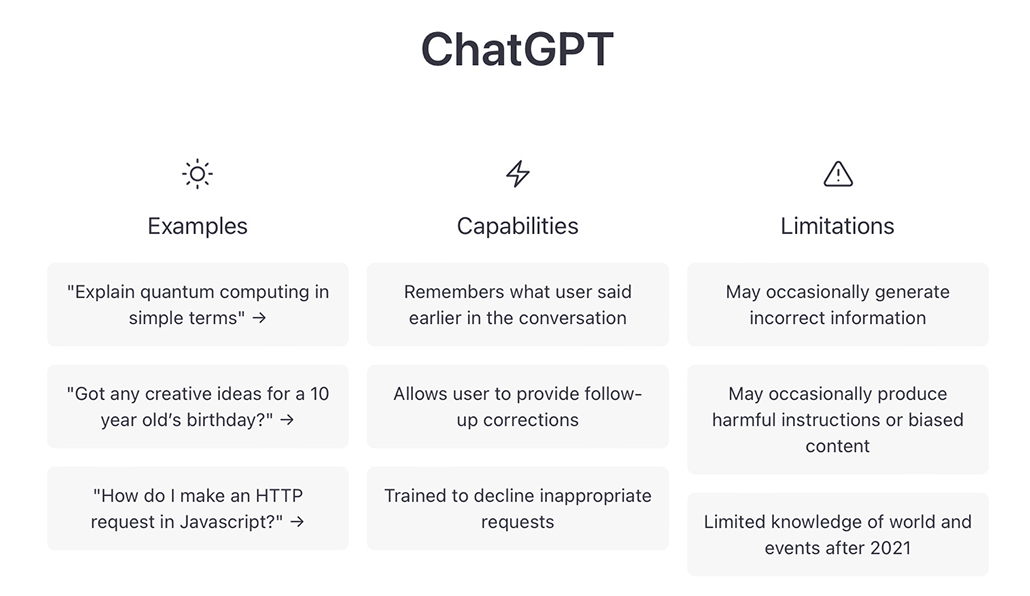

ChatGPT is impressive and can be quite useful. It can help people write text, for instance, and code. However, “it’s not magic,” says Casey Fiesler. In fact, it often seems intelligent and confident while making mistakes — and sometimes parroting biases.

Glenn Harvey


“We need to talk,” Brett Vogelsinger said. A student had just asked for feedback on an essay. One paragraph stood out. Vogelsinger, a 9th-grade English teacher in Doylestown, Pa., realized that the student hadn’t written the piece himself. He had used ChatGPT. It’s a new artificial intelligence (AI) tool. It answers questions. It writes code. And it can generate long essays and stories.

The company OpenAI made ChatGPT available for free at the end of November 2022. Within a week, it had more than a million users. Other tech companies are racing to put out similar tools. Google launched Bard in early February. The AI company Anthropic is testing a new chatbot named Claude. And another AI company, DeepMind, is working on a bot called Sparrow.

ChatGPT marks the beginning of a new wave of AI that is poised to disrupt education. Whether that’s a good or bad thing remains to be seen.

Some people have been using ChatGPT out of curiosity or for entertainment. I asked it to invent a silly excuse for not doing homework in the style of a medieval proclamation. In less than a second, it offered me: “Hark! Thy servant was beset by a horde of mischievous leprechauns, who didst steal mine quill and parchment, rendering me unable to complete mine homework.”

But students can also use it to cheat. When Stanford University’s student-run newspaper polled students at the university, 17 percent said they had used ChatGPT on assignments or exams in late 2022. Some admitted to submitting the chatbot’s writing as their own. For now, these students and others are probably getting away with cheating.

And that’s because ChatGPT does an excellent job. “It can outperform a lot of middle-school kids,” Vogelsinger says. He probably wouldn’t have known his student used it — except for one thing. “He copied and pasted the prompt,” says Vogelsinger.

This essay was still a work in progress. So Vogelsinger didn’t see this as cheating. Instead, he saw an opportunity. Now, the student is working with the AI to write that essay. It’s helping the student develop his writing and research skills.

“We’re color-coding,” says Vogelsinger. The parts the student writes are in green. Those parts that ChatGPT writes are in blue. Vogelsinger is helping the student pick and choose only a few sentences from the AI to keep. He’s allowing other students to collaborate with the tool as well. Most aren’t using it regularly, but a few kids really like it. Vogelsinger thinks it has helped them get started and to focus their ideas.

This story had a happy ending.

But at many schools and universities, educators are struggling with how to handle ChatGPT and other tools like it. In early January, New York City public schools banned ChatGPT on their devices and networks. They were worried about cheating. They also were concerned that the tool’s answers might not be accurate or safe. Many other school systems in the United States and elsewhere have followed suit.

But some experts suspect that bots like ChatGPT could also be a great help to learners and workers everywhere. Like calculators for math or Google for facts, an AI chatbot makes something that once took time and effort much simpler and faster. With this tool, anyone can generate well-formed sentences and paragraphs — even entire pieces of writing.

How could a tool like this change the way we teach and learn?

The good, the bad and the weird

ChatGPT has wowed its users. “It’s so much more realistic than I thought a robot could be,” says Avani Rao. This high school sophomore lives in California. She hasn’t used the bot to do homework. But for fun, she’s prompted it to say creative or silly things. She asked it to explain addition, for instance, in the voice of an evil villain. Its answer is highly entertaining.

Tools like ChatGPT could help create a more equitable world for people who are trying to work in a second language or who struggle with composing sentences. Students could use ChatGPT like a coach to help improve their writing and grammar. Or it could explain difficult subjects. “It really will tutor you,” says Vogelsinger, who had one student come to him excited that ChatGPT had clearly outlined a concept from science class.

Teachers could use ChatGPT to help create lesson plans or activities — ones personalized to the needs or goals of specific students.

Several podcasts have had ChatGPT as a “guest” on the show. In 2023, two people are going to use an AI-powered chatbot as a lawyer. It will tell them what to say during their appearances in traffic court. The company that developed the bot is paying them to test the new tech. Their vision is a world in which legal help might be free.


Xiaoming Zhai tested ChatGPT to see if it could write an academic paper. Zhai is an expert in science education at the University of Georgia in Athens. He was impressed with how easy it was to summarize knowledge and generate good writing using the tool. “It’s really amazing,” he says.

All of this sounds great. Still, some really big problems exist.

Most worryingly, ChatGPT and tools like it sometimes get things very wrong. In an ad for Bard, the chatbot claimed that the James Webb Space Telescope took the very first picture of an exoplanet. That’s false. In a conversation posted on Twitter, ChatGPT said the fastest marine mammal was the peregrine falcon. A falcon, of course, is a bird and doesn’t live in the ocean.

ChatGPT can be “confidently wrong,” says Casey Fiesler. Its text, she notes, can contain “mistakes and bad information.” She is an expert in the ethics of technology at the University of Colorado Boulder. She has made multiple TikTok videos about the pitfalls of ChatGPT.

Also, for now, all of the bot’s training data comes from before a cutoff date in 2021. So it knows nothing about more recent events.

Finally, ChatGPT does not provide sources for its information. If asked for sources, it will make them up. It’s something Fiesler revealed in another video. Zhai discovered the exact same thing. When he asked ChatGPT for citations, it gave him sources that looked correct. In fact, they were bogus.

Zhai sees the tool as an assistant. He double-checked its information and decided how to structure the paper himself. If you use ChatGPT, be honest about it and verify its information, the experts all say.

Under the hood

ChatGPT’s mistakes make more sense if you know how it works. “It doesn’t reason. It doesn’t have ideas. It doesn’t have thoughts,” explains Emily M. Bender. She is a computational linguist who works at the University of Washington in Seattle. ChatGPT may sound a lot like a person, but it’s not one. It is an AI model developed using several types of machine learning.

The primary type is a large language model. This type of model learns to predict what words will come next in a sentence or phrase. It does this by churning through vast amounts of text. It places words and phrases into a kind of map that represents their relationships to each other. (This map has far more than three dimensions; in big models it has thousands.) Words that tend to appear together, like peanut butter and jelly, end up closer together in this map.
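To make “predicting the next word” a bit more concrete, here is a toy sketch in Python. It is a huge simplification and our own illustration, not how ChatGPT is actually built: it simply counts which word most often follows another in a tiny made-up text. Real large language models use neural networks trained on billions of words, and they look at much more than the single word before.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the word most often seen right after `word`, or None if unseen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# A tiny, made-up "training set" (real models read billions of words).
corpus = (
    "i like peanut butter and jelly . "
    "she made a peanut butter and jelly sandwich . "
    "peanut butter and banana is good too ."
)
model = train_bigrams(corpus)
print(predict_next(model, "peanut"))  # butter
print(predict_next(model, "and"))     # jelly (seen twice, banana only once)
```

Because “jelly” follows “and” more often than “banana” does in this text, the toy model picks it. This also hints at why such a system can sound confident while being wrong: it chooses what is likely, not what is true.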

Before ChatGPT, OpenAI had made GPT3. This very large language model came out in 2020. It was trained on text containing an estimated 300 billion words. That text came from the internet and encyclopedias. It also included dialogue transcripts, essays, exams and much more, says Sasha Luccioni. She is a researcher at the company Hugging Face in Montreal, Canada. This company builds AI tools.

OpenAI improved upon GPT3 to create GPT3.5. This time, OpenAI added a new type of machine learning. It’s known as “reinforcement learning from human feedback.” That means people rated the AI’s responses. GPT3.5 learned to give more of the kinds of responses that people rated highly. It also learned not to generate hurtful, biased or inappropriate responses. GPT3.5 essentially became a people-pleaser.

During ChatGPT’s development, OpenAI added even more safety rules to the model. As a result, the chatbot will refuse to talk about certain sensitive issues or information. But this also raises another issue: Whose values are being programmed into the bot, including what it is — or is not — allowed to talk about?

OpenAI is not offering exact details about how it developed and trained ChatGPT. The company has not released its code or training data. This disappoints Luccioni. “I want to know how it works in order to help make it better,” she says.

When asked to comment on this story, OpenAI provided a statement from an unnamed spokesperson. “We made ChatGPT available as a research preview to learn from real-world use, which we believe is a critical part of developing and deploying capable, safe AI systems,” the statement said. “We are constantly incorporating feedback and lessons learned.” Indeed, some early experimenters got the bot to say biased things about race and gender. OpenAI quickly patched the tool. It no longer responds the same way.

ChatGPT is not a finished product. It’s available for free right now because OpenAI needs data from the real world. The people who are using it right now are its guinea pigs. If you use it, notes Bender, “You are working for OpenAI for free.”

Humans vs robots

How good is ChatGPT at what it does? Catherine Gao is part of one team of researchers that is putting the tool to the test.

At the top of a research article published in a journal is an abstract. It summarizes the author’s findings. Gao’s group gathered 50 real abstracts from research papers in medical journals. Then they asked ChatGPT to generate fake abstracts based on the paper titles. The team asked people who review abstracts as part of their job to identify which were which.

The reviewers mistook roughly one in every three (32 percent) of the AI-generated abstracts for human-written ones. “I was surprised by how realistic and convincing the generated abstracts were,” says Gao. She is a doctor and medical researcher at Northwestern University’s Feinberg School of Medicine in Chicago, Ill.

In another study, Will Yeadon and his colleagues tested whether AI tools could pass a college exam. Yeadon is a physics teacher at Durham University in England. He picked an exam from a course that he teaches. The test asks students to write five short essays about physics and its history. Students who take the test have an average score of 71 percent, which he says is equivalent to an A in the United States.

Yeadon used a close cousin of ChatGPT, called davinci-003. It generated 10 sets of exam answers. Afterward, he and four other teachers graded them using their typical grading standards for students. The AI also scored an average of 71 percent. Unlike the human students, however, it had no very low or very high marks. It consistently wrote well, but not excellently. For students who regularly get bad grades in writing, Yeadon says, this AI “will write a better essay than you.”

These graders knew they were looking at AI work. In a follow-up study, Yeadon plans to mix work from the AI and from students without telling the graders whose work they are looking at.

Cheat-checking with AI

People may not always be able to tell if ChatGPT wrote something or not. Thankfully, other AI tools can help. These tools use machine learning to scan many examples of AI-generated text. After training this way, they can look at new text and tell you whether it was most likely composed by AI or a human.

Most free AI-detection tools were trained on older language models, so they don’t work as well for ChatGPT. Soon after ChatGPT came out, though, one college student spent his holiday break building a free tool to detect its work. It’s called GPTZero.

The company Originality.ai sells access to another up-to-date tool. Founder Jon Gillham says that in a test of 10,000 samples of text composed by GPT3, the tool tagged 94 percent of them correctly. When ChatGPT came out, his team tested a much smaller set of 20 samples that had been created by GPT3, GPT3.5 and ChatGPT. Here, Gillham says, “it tagged all of them as AI-generated. And it was 99 percent confident, on average.”

In addition, OpenAI says it is working on adding “digital watermarks” to AI-generated text. The company hasn’t said exactly what it means by this. But Gillham explains one possibility. The AI ranks many different possible words when it is generating text. Say its developers told it to always choose the word ranked in third place rather than first place at specific places in its output. These words would act “like a fingerprint,” says Gillham.
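Gillham’s idea can be sketched in a few lines of Python. This is only an illustration under our own assumptions, not OpenAI’s method: the ranked word lists are made up, and the rule “mark every fourth word by picking the third-ranked choice” is hypothetical. A real checker would also need the AI’s own rankings for each position, which here we simply hand to it.

```python
WATERMARK_EVERY = 4  # hypothetical rule: every 4th word carries the mark
WATERMARK_RANK = 2   # index 2 in a best-first list = the third-ranked word

def is_marked(position):
    """True for the positions where the hidden rule applies."""
    return position % WATERMARK_EVERY == WATERMARK_EVERY - 1

def generate(ranked_candidates):
    """Pick the top word normally, but the third-ranked word at marked spots."""
    output = []
    for i, ranked in enumerate(ranked_candidates):
        output.append(ranked[WATERMARK_RANK] if is_marked(i) else ranked[0])
    return output

def watermark_score(words, ranked_candidates):
    """Fraction of marked positions where the text used the third-ranked word."""
    marked = [i for i in range(len(words)) if is_marked(i)]
    if not marked:
        return 0.0
    hits = sum(words[i] == ranked_candidates[i][WATERMARK_RANK] for i in marked)
    return hits / len(marked)

# Made-up rankings: for each position, possible words from best to worst.
ranked = [
    ["the", "a", "one"],
    ["cat", "dog", "bird"],
    ["sat", "slept", "stood"],
    ["on", "near", "under"],   # marked position
    ["the", "a", "my"],
    ["soft", "old", "red"],
    ["warm", "big", "new"],
    ["mat", "rug", "chair"],   # marked position
]
watermarked = generate(ranked)
plain = [r[0] for r in ranked]  # always picks the top word
print(" ".join(watermarked))               # the cat sat under the soft warm chair
print(watermark_score(watermarked, ranked))  # 1.0
print(watermark_score(plain, ranked))        # 0.0
```

The watermarked sentence still reads naturally, yet a checker that knows the rule finds the “fingerprint” at every marked spot. Over a long text, a person writing normally would rarely hit the third-ranked word at exactly those places by chance.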

The future of writing

Tools like ChatGPT are only going to improve with time. As they get better, people will have to adjust to a world in which computers can write for us. We’ve made these sorts of adjustments before. As high-school student Rao points out, Google was once seen as a threat to education because it made it possible to instantly look up any fact. We adapted by coming up with teaching and testing materials that don’t require students to memorize things.

Now that AI can generate essays, stories and code, teachers may once again have to rethink how they teach and test. That might mean preventing students from using AI. They could do this by making students work without access to technology. Or they might invite AI into the writing process, as Vogelsinger is doing. Concludes Rao, “We might have to shift our point of view about what’s cheating and what isn’t.”

Students will still have to learn to write without AI’s help. Kids still learn to do basic math even though they have calculators. Learning how math works helps us learn to think about math problems. In the same way, learning to write helps us learn to think about and express ideas.

Rao thinks that AI will not replace human-generated stories, articles and other texts. Why? She says: “The reason those things exist is not only because we want to read it but because we want to write it.” People will always want to make their voices heard. ChatGPT is a tool that could enhance and support our voices — as long as we use it with care.

Correction: Gillham’s comment on the 20 samples that his team tested has been corrected to show how confident his team’s AI-detection tool was in identifying text that had been AI-generated (not in how accurately it detected AI-generated text).