Sleep helps AI models learn new things without forgetting old ones

This finding could also shed light on why people and animals sleep

Restful sleep is good for your brain. It helps your mind sort through recent experiences, which can help form new memories without overwriting old ones. But how? A new study used computer models to investigate.

SDI Productions/iStock/Getty Images Plus

Your body needs sleep to rest. But your brain isn’t resting when you are. It’s doing many things as you slumber, including sorting through recent experiences. This boosts your ability to learn and remember. It now turns out the same strategy can help computer brains.

Pavel Sanda is a computer scientist at the Czech Academy of Sciences in Prague. He was part of a research team that used a computer model to explore how sleep helps a brain learn. “Something very important is going on [during sleep],” he says. The team worked with an artificial intelligence, or AI, system built to model the brain.

AI models are everywhere. They recommend videos to you on social media. They can recognize your face in photos. They can even drive cars. The vast majority of these models work using what’s known as artificial neural networks, or ANNs. These are a popular form of machine learning.

ANNs were originally inspired by the networks of neurons in a living brain. But in practice, the most common ANNs are nothing like a brain. “Under the hood, they are essentially just linear algebra and math techniques,” says J. Erik Delanois. He is a graduate student in computer science at the University of California San Diego. He worked with Sanda on the new research.

Most ANNs can learn to perform one task just fine. But when it comes to learning a new task, they have a problem that brains don’t. They either fail to learn the new task, or they learn the new thing but erase most of what they knew about their original task. AI developers refer to this as “catastrophic forgetting,” explains Sanda.

But this problem doesn’t tend to affect the human brain. Sanda wondered, “How do [our] new memories get into the pool of old memories without erasing them?”

He knew that as our brains sort through new experiences during sleep, they replay those events many times. So he decided to mimic this aspect of human sleep in an AI model. It worked: the AI's “sleep” helped it learn new things without forgetting old ones, avoiding catastrophic forgetting. Sanda’s group shared its discovery November 18, 2022, in PLOS Computational Biology.

Exploring a grid world

The model Sanda’s team used wasn’t a typical ANN. They used a type known as a “spiking neural network.” It more closely models the human brain, but takes more time and effort to train.
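In a typical spiking network, each digital neuron builds up charge from its inputs and fires a brief pulse, or “spike,” once that charge crosses a threshold. The spikes are what carry information onward. The article doesn't say which neuron model Sanda's team used. As an illustration only, here is a minimal Python sketch of one common textbook choice, the leaky integrate-and-fire neuron. The function name `simulate_lif` and the `threshold` and `leak` values are assumptions, not details from the study.

```python
def simulate_lif(inputs, threshold=1.0, leak=0.9):
    """Return the time steps at which a leaky integrate-and-fire neuron spikes.

    Illustrative sketch; threshold and leak values are assumed, not from the study.
    """
    v = 0.0  # the neuron's built-up charge (membrane potential)
    spikes = []
    for t, current in enumerate(inputs):
        v = v * leak + current  # some charge leaks away, new input adds on
        if v >= threshold:      # crossing the threshold fires a spike...
            spikes.append(t)
            v = 0.0             # ...and the neuron resets to zero
    return spikes
```

With a steady input of 0.5, `simulate_lif([0.5] * 9)` returns `[2, 5, 8]`: the neuron needs three time steps to charge up before each spike. Simulating this moment-by-moment charging for every neuron is part of why spiking networks take more effort to train than ordinary ANNs.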

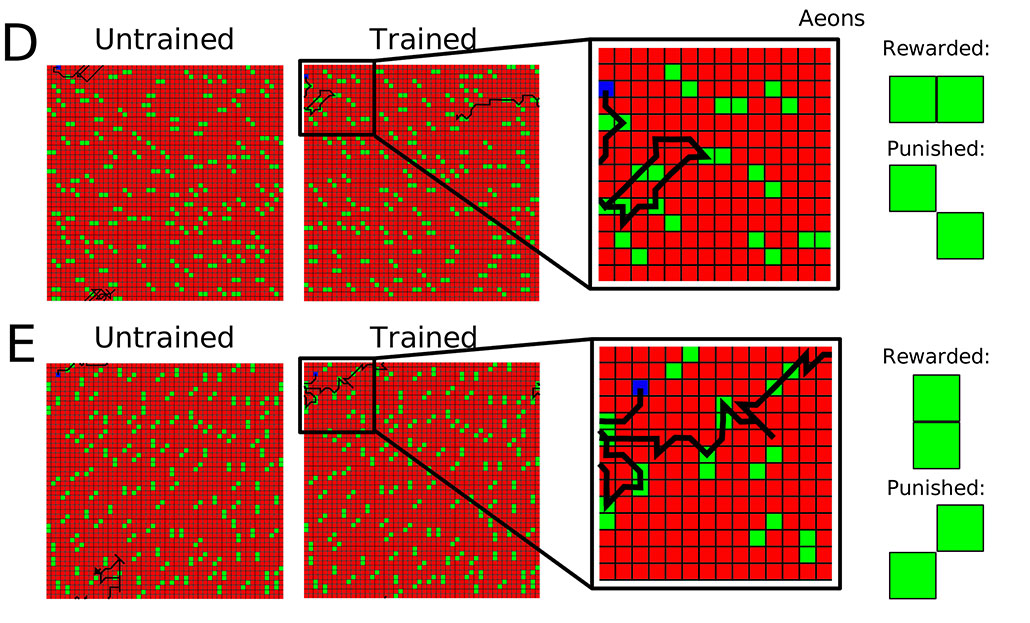

First, they trained this network on a simple task. It controlled a blue dot wandering through a grid. The grid was seeded with three types of paired green blocks. Some pairs sat side by side. Others sat one atop another. In the third type, the two blocks sat diagonally from each other.

For the first task, the network learned to seek out side-by-side pairs. At the same time, it had to avoid diagonal pairs that sloped down. It’s as if it were an animal learning that “some berries are toxic, while some are good for you,” explains Sanda.
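To make the grid world concrete, here is a hypothetical Python sketch of how a program might label a pair of blocks and score it under the first task's rules. The article gives no reward values, so the +1 and -1 scores, the `(row, col)` coordinates and the names `pair_type`, `reward` and `TASK_1_REWARDS` are all assumptions for illustration.

```python
def pair_type(a, b):
    """Label two neighboring blocks given as (row, col); rows count downward.

    Hypothetical helper for illustration, not code from the study.
    """
    # Put the left-hand block first (ties broken by row, for stacked pairs).
    (r1, c1), (r2, c2) = sorted([a, b], key=lambda cell: (cell[1], cell[0]))
    dr, dc = r2 - r1, c2 - c1
    if dr == 0 and dc == 1:
        return "side_by_side"
    if dr == 1 and dc == 0:
        return "stacked"
    if abs(dr) == 1 and dc == 1:
        # Reading left to right: if the right block is lower, the pair slopes down.
        return "diagonal_down" if dr == 1 else "diagonal_up"
    raise ValueError("blocks are not a neighboring pair")


# Task 1 (assumed scores): seek side-by-side pairs, avoid downward diagonals.
TASK_1_REWARDS = {"side_by_side": 1, "diagonal_down": -1}


def reward(a, b, rewards):
    """Score a pair under a task's rules; pairs the task ignores score 0."""
    return rewards.get(pair_type(a, b), 0)
```

A second task would only need a different table, for example one that rewards `"stacked"` and penalizes `"diagonal_up"`.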

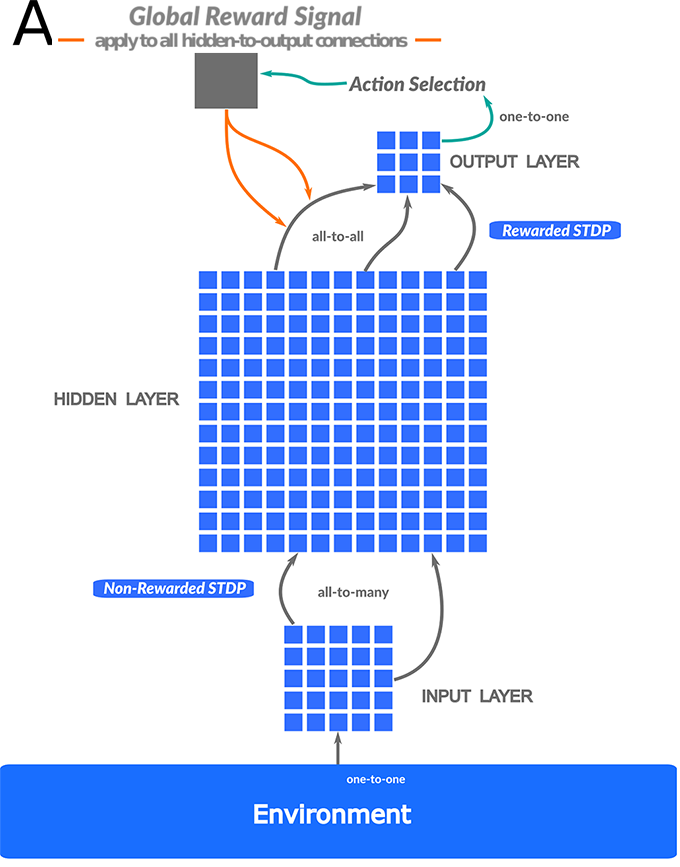

How did the network learn? It contained three layers. An input layer acted like the senses, taking in information from the grid world. The middle layer was like a grid of digital neurons. Each one learned to recognize certain patterns that the senses encountered. An output layer then made decisions based on those patterns and moved the dot. When the dot moved toward the yummy berries or away from the bad berries, the network got a reward. So it learned which patterns to seek out and which to avoid.
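The article describes this learning only in outline. Below is a minimal Python sketch of the same idea, assuming neurons that are simply on (1) or off (0) and a basic reward-modulated Hebbian rule: connections between neurons that were active together grow stronger after a reward and weaker after a penalty. That rule is a stand-in chosen for simplicity. The article doesn't say exactly how the team's network updated its connections, and all names and numbers here are hypothetical.

```python
def layer_activity(inputs, weights, threshold=0.5):
    """Binary activity of the next layer: a neuron turns on (1) if its
    weighted input reaches the threshold. weights[i][j] links input i to neuron j."""
    n_out = len(weights[0])
    return [
        1 if sum(x * row[j] for x, row in zip(inputs, weights)) >= threshold else 0
        for j in range(n_out)
    ]


def reward_modulated_update(weights, pre, post, reward, lr=0.1):
    """Change each weight by lr * reward * pre * post.

    Only connections between neurons that were active together change:
    a positive reward strengthens them, a negative one weakens them.
    """
    return [
        [w + lr * reward * pre_i * post_j for w, post_j in zip(row, post)]
        for row, pre_i in zip(weights, pre)
    ]


# Tiny example: 3 "sense" inputs feeding 2 middle-layer neurons.
senses = [1, 0, 1]
w_in_mid = [[0.3, 0.1], [0.5, 0.5], [0.3, 0.1]]
middle = layer_activity(senses, w_in_mid)  # [1, 0]: only the first neuron fires
# A reward strengthens the links from the active inputs to the active neuron by 0.1.
w_in_mid = reward_modulated_update(w_in_mid, senses, middle, reward=1)
```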

Next, the team tried teaching the network something new.

Now, stacked blocks were tasty and upward-sloping diagonals were nasty. Normally, the network would have to forget its first lesson to learn this new one. However, the team didn’t try to teach it the new rules all at once. Instead, they let the AI system explore the grid for a brief time, then take a sleep-like break.
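This schedule, short bouts of exploring followed by sleep-like breaks, can be sketched as a simple loop. In this hypothetical Python sketch, `explore` and `sleep` are placeholder functions supplied by the caller. The number of cycles and how long each phase lasts are not given in the article.

```python
from typing import Callable, List


def train_interleaved(
    n_cycles: int,
    explore: Callable[[int], None],
    sleep: Callable[[int], None],
) -> List[str]:
    """Alternate brief awake practice on the new task with sleep-like breaks.

    `explore` and `sleep` are hypothetical callbacks: one would run the network
    in the grid world under the new rules, the other an offline phase with no
    grid at all. Returns the order in which the phases ran.
    """
    log = []
    for cycle in range(n_cycles):
        explore(cycle)  # short stretch of learning the new rules
        log.append("awake")
        sleep(cycle)    # offline break: no grid, no actions
        log.append("sleep")
    return log
```

The design choice is that the new task never gets one long, uninterrupted training run. Each short burst of new learning is followed by a break before the next one begins.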

During the break, there was no grid and no shapes to encounter. So there was nothing to see and no actions to take. Still, the network hummed along. It randomly replayed the way the middle layer had responded to the world. Thanks to these sleep-like breaks, the network managed to learn both tasks well.
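Here is a rough Python sketch of what a sleep-like phase could look like, assuming a simple Hebbian-style rule (an illustration, not the team's published method). With the senses switched off, input neurons fire at random. Middle-layer neurons that get pushed past their threshold fire too, and connections between neurons that fire together are strengthened while the rest weaken slightly. The function name, firing probability, threshold and step sizes are all hypothetical.

```python
import random


def sleep_phase(weights, steps=100, fire_prob=0.1, threshold=0.5,
                inc=0.01, dec=0.001, seed=0):
    """Hypothetical sleep-like phase: random spontaneous input, no senses,
    Hebbian-style updates. weights[i][j] links input i to middle neuron j."""
    rng = random.Random(seed)
    w = [row[:] for row in weights]  # copy so the caller's weights are untouched
    n_in, n_out = len(w), len(w[0])
    for _ in range(steps):
        # Random spontaneous spikes stand in for the missing sensory input.
        pre = [1 if rng.random() < fire_prob else 0 for _ in range(n_in)]
        # The learned weights decide which middle neurons fire, so random input
        # tends to re-activate patterns the network already knows.
        post = [
            1 if sum(pre[i] * w[i][j] for i in range(n_in)) >= threshold else 0
            for j in range(n_out)
        ]
        for i in range(n_in):
            if not pre[i]:
                continue  # only connections from neurons that fired change
            for j in range(n_out):
                # Fired together: strengthen. Input fired alone: weaken slightly.
                delta = inc if post[j] else -dec
                w[i][j] = min(1.0, max(0.0, w[i][j] + delta))  # keep within [0, 1]
    return w
```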

The importance of sleep

Mimicking sleep may not be the best way for all AI models to learn multiple tasks. A computer has no body. And many of the things that happen during sleep have to do with maintaining the health of the brain (such as flushing out wastes), jobs a computer doesn’t need done.

However, this research is interesting for neuroscientists, says Kanaka Rajan. She’s a neuroscientist at the Icahn School of Medicine at Mount Sinai in New York City. Rajan did not take part in the study, but she does use computer models to study how people and other animals learn. Most such research focuses on what happens while the brain is awake.

AI models of brains rarely include a sleep-like phase, she says. And now, she adds, maybe they should. The new research “is a very important first step towards understanding the importance of sleep,” she says. However, she points out, the researchers will need to add more to their model for it to better represent a true brain.

Sanda’s group hopes to figure out how sleep improves learning and memory. If it succeeds, doctors might be able to find ways to stimulate the brain during sleep to help treat memory disorders.