Can computers think? Why this is proving so hard to answer

In 1950, computer scientist Alan Turing suggested a way to find out if machines could think

Artificial intelligence does a lot of work for humans. AI can play chess, translate languages, even drive cars. But can it think? Most scientists have concluded … probably not yet.

Andrey Suslov/iStock/Getty Images Plus

Today, we’re surrounded by so-called smart devices. Alexa plays music on request. Siri can tell us who won last night’s baseball game — or if it’s likely to rain today. But are these machines truly smart? What would it mean for a computer to be intelligent, anyway?

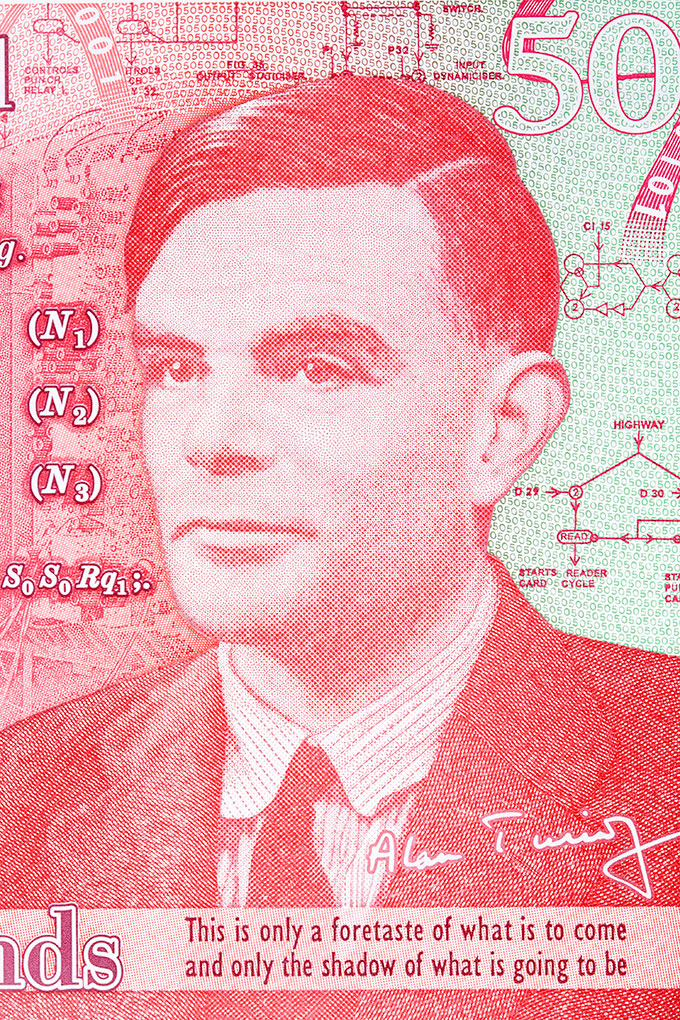

Virtual assistants may be new, but questions about machine intelligence are not. Back in 1950, British mathematician and computer scientist Alan Turing came up with a way to test whether a machine was truly intelligent. He called it the “imitation game.” Today, we call it the Turing test.

The game goes like this: Someone — let’s call this person Player A — sits alone in a room and types messages to two other players. Let’s call them B and C. One of those players is human, the other is a computer. Player A’s job is to determine whether B or C is the human.

Turing debuted his game idea in a 1950 paper in the journal Mind. He began the paper with these words: “I propose to consider the question, ‘Can machines think?’”

It was a bold question, considering computers as we now know them did not yet exist. But Turing had been working since way back in 1936 on the idea for the first computer that people could program with software. This would be a computer that could do anything asked of it, given the right instructions.

Though never built, Turing’s design led directly to today’s computers. And Turing believed that such machines would one day become sophisticated enough to truly think.

From codes toward coding

Alan Turing was a British mathematician and computer scientist who lived from 1912 to 1954. In 1936, he came up with the basic idea for the first programmable computer. That is, a computer that could do anything asked of it, when given the proper instructions. (Today, we call that package of instructions software.)

Turing’s work was interrupted during World War II when the British government asked for his help. Nazi leaders used a cipher, called the Enigma code, to hide the meaning of orders sent to their military commanders. The code was extremely difficult to break, but Turing and his team managed to do it. This helped the British and their allies, including the United States, win the war.

After the war, Turing turned his attention back to computers and AI. He started to lay out the design for a programmable computer. The machine was never built. But the 1950 British computer, shown at right, was based on Turing’s design.

But Turing also knew it was hard to show what actually counts as thinking. The reason it’s so tricky is that we don’t even understand how people think, says Ayanna Howard. A roboticist at Ohio State University, in Columbus, she studies how robots and humans interact.

Turing’s imitation game was a clever way to get around that problem. If a computer behaves as if it is thinking, he decided, then you can assume it is. That may sound like an odd thing to assume. But we do the same with people. We have no way of knowing what’s going on in their heads.

If people seem to be thinking, we assume they are. Turing suggested we use the same approach when judging computers. Hence: the Turing test. If a computer could trick someone into believing it was human, it must be thinking like one.

A computer passes the test if it can convince people that it’s a human 30 percent of the times it plays the game. Turing figured that by the year 2000, a machine would be able to pull this off. In the decades since, many machines have stepped up to the challenge. But their results have always been questionable. And some researchers now question whether the Turing test is a useful measure of machine smarts at all.

Chatbots take the test

At the time Turing suggested his imitation game, it was just a hypothetical test, or thought experiment. There were no computers that could play it. But artificial intelligence, or AI, has come a long way since then.

In the mid-1960s, a researcher named Joseph Weizenbaum created a chatbot called ELIZA. He programmed it to follow a very simple set of rules: ELIZA would just parrot back any question it had been asked.

One of the programs ELIZA could run made it act like a psychologist talking with a patient. For example, if you said to ELIZA, “I’m worried I might fail my math test,” it might reply, “Do you think you might fail your math test?” Then if you said, “Yes, I think I might,” ELIZA might say something like, “Why do you say that?” ELIZA never said anything more than stock replies and re-wordings of what people said to it.
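To see how little machinery this kind of chat needs, here is a minimal sketch in Python. It is not Weizenbaum’s actual program. The single matching rule, the word-swap table and the function names are all made up for illustration. The sketch only shows the general idea of re-wording a statement and falling back on a stock reply.

```python
import re

# Hypothetical word swaps, invented for this sketch (not ELIZA's real script).
REFLECTIONS = {"i": "you", "i'm": "you're", "my": "your", "me": "you", "am": "are"}

# Stock reply used when no re-wording rule matches.
STOCK_REPLY = "Why do you say that?"


def reflect(text):
    """Swap first-person words for second-person ones."""
    words = text.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def reply(message):
    """Re-word the message as a question if the rule matches, else use the stock reply."""
    # Treat curly and straight apostrophes the same, and drop end punctuation.
    cleaned = message.replace("\u2019", "'").strip().rstrip(".!?")
    match = re.match(r"i'?m worried (.*)", cleaned, re.IGNORECASE)
    if match:
        return "Do you think " + reflect(match.group(1)) + "?"
    return STOCK_REPLY


print(reply("I'm worried I might fail my math test."))
print(reply("Yes, I think I might."))
```

The first call re-words the worry as a question. The second matches no rule, so the program falls back on its stock reply. Nothing in the code understands math tests or worry.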

ELIZA never took the Turing test. But it’s possible it would have passed. Many people who interacted with it thought they were getting responses from a real expert. Weizenbaum was horrified that so many people thought ELIZA was intelligent — even after he explained how “she” worked.

In 2014, during a Turing-test competition in England, an AI chatbot program called Eugene Goostman conversed for five minutes with each of 30 human judges. It managed to convince 10 of them, or about 33 percent, that it was a human. That would seem to clear the 30 percent bar for passing the Turing test. Eugene used a few tricks, however. In fact, some experts say the bot cheated.

Eugene claimed to be a 13-year-old Ukrainian boy. Its conversations were in English. Eugene’s youth and lack of familiarity with English could have explained some things that might otherwise have seemed suspicious. When one judge asked Eugene what music he liked, the chatbot replied, “To be short I’ll only say that I HATE Britnie Spears. All other music is OK compared to her.” Misspelling “Britney” and using the slightly odd phrase “to be short” didn’t raise suspicions. After all, Eugene’s first language wasn’t English. And his comments about Britney Spears sounded like something a teen boy might say.

In 2018, Google announced a new personal-assistant AI program: Google Duplex. It didn’t take part in a Turing-test competition. Still, it was convincing. Google demonstrated the power of this tech by having the AI call up a hair salon and schedule an appointment. The receptionist who made the appointment didn’t seem to realize she was talking to a computer.

Another time, Duplex phoned a restaurant to make reservations. Again, the person who took the call didn’t seem to notice anything odd. These were brief exchanges. And unlike in a real Turing test, the people who answered the phone weren’t intentionally trying to evaluate whether the caller was human.

So have such computer programs passed the Turing test? Probably not, most scientists now say.

Cheap tricks

The Turing test has provided generations of AI researchers with food for thought. But it has also raised a lot of criticism.

John Laird is a computer scientist who in June retired from the University of Michigan, in Ann Arbor. Last year, he founded the Center for Integrative Cognition, in Ann Arbor, where he now works. For much of his career, he’s worked on creating AI that can tackle many different types of problems. Scientists call this “general AI.”

Laird says programs that try to pass the Turing test aren’t working to be as smart as they could be. To seem more human, they instead try to make mistakes — like spelling or math errors. That might help a computer convince someone it’s human. But it’s useless as a goal for AI scientists, he says, because it doesn’t help scientists create smarter machines.

Hector Levesque has criticized the Turing test for similar reasons. Levesque is an AI researcher in Ontario, Canada, at the University of Toronto. In a 2014 paper, he argued that the design of the Turing test causes programmers to create AI that is good at deception, but not necessarily intelligent in any useful way. In it, he used the term “cheap tricks” to describe techniques like the ones used by ELIZA and Eugene Goostman.

All in all, says Laird, the Turing test is good for thinking about AI. But, he adds, it’s not much good to AI scientists. “No serious AI researcher today is trying to pass the Turing test,” he says.

Even so, some modern AI programs might be able to pass that test.

Fill in the blanks

Large language models, or LLMs, are a type of AI. Researchers train these computer programs to use language by feeding them enormous amounts of data. Those data come from books, articles in newspapers and blogs, or maybe social media sites such as Twitter and Reddit.

Their training goes something like this: Researchers give the computer a sentence with a word missing. The computer has to guess the missing word. At first, the computer does a pretty lousy job: “Tacos are a popular … skateboard.” But through trial and error, the computer gets the hang of it. Soon, it might fill in the blank like this: “Tacos are a popular food.” Eventually, it might come up with: “Tacos are a popular food in Mexico and in the United States.”
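The fill-in-the-blank idea can be sketched with a much simpler technique than the one real LLMs use. The Python example below just counts which word most often follows another word in a tiny, made-up set of sentences. Real LLMs rely on large neural networks and vastly more text. The corpus and the function names here are assumptions chosen for illustration.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus, for illustration only.
CORPUS = [
    "tacos are a popular food",
    "pizza is a popular food",
    "soccer is a popular sport",
    "tacos are a tasty food",
]


def train(sentences):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev_word, next_word in zip(words, words[1:]):
            counts[prev_word][next_word] += 1
    return counts


def fill_blank(counts, prev_word):
    """Guess the word seen most often after prev_word, or None if it was never seen."""
    followers = counts.get(prev_word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


model = train(CORPUS)
guess = fill_blank(model, "popular")
print("Tacos are a popular", guess)
```

With these four sentences, “food” follows “popular” twice and “sport” once, so the program guesses “food.” With no training sentences at all, it would have nothing to go on, which is roughly why an untrained program might say “skateboard.”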

Once trained, such programs can use language very much as a human does. They can write blog posts. They can summarize a news article. Some have even learned to write computer code.

You’ve probably interacted with similar technology. When you’re texting, your phone may suggest the next word. This is a feature called auto-complete. But LLMs are vastly more powerful than auto-complete. Brian Christian says they’re like “auto-complete on steroids.”

Christian studied computer science and philosophy. He now writes books about technology. He thinks large language models may have already passed the Turing test, at least unofficially. “Many people,” he says, “would find it hard to tell the difference between a text exchange with one of these LLMs and one with a random stranger.”

Blaise Agüera y Arcas works at Google in Seattle, Wash., designing technologies that use AI. In a paper in Daedalus in May, he describes conversations he had with LaMDA, an LLM program. For instance, he asked LaMDA if it had a sense of smell. The program responded that it did. Then LaMDA told him its favorite smells were spring showers and the desert after a rain.

Of course, Agüera y Arcas knew he was chatting with an AI. But if he hadn’t, he might have been fooled.

Learning about ourselves

It’s hard to say whether any machines have truly passed the Turing test. As Laird and others argue, the test may not mean much anyway. Still, Turing and his test got scientists and the public thinking about what it means to be intelligent — and what it means to be human.

In 2009, Christian took part in a Turing-test competition. He wrote about it in his book, The Most Human Human. Christian was one of the people trying to convince the judges that he wasn’t a computer. He says it was a strange feeling, trying to convince another individual that he was truly human. The experience started out being about computer science, he says. But it quickly became about how we connect to other people. “I ended up learning as much about human communication as I did about AI,” he says.

Another major question facing AI researchers: What are the impacts of making machines more human-like? People have their biases. So when people build machine-learning programs, they can pass their biases on to AI.

“The tricky part is that when we design a model, we have to train it on data,” says Anqi Wu. “Where does that data come from?” Wu is a neuroscientist who studies machine learning at the Georgia Institute of Technology in Atlanta. The huge amount of data fed into LLMs is taken from human communications: books, websites and more. Those data teach AI a lot about the world. They also teach AI our biases.

In one case, AI researchers created a computer program that could do a sort of math with words. For example, when given the statement “Germany plus capital,” the program returned the capital of Germany: “Berlin.” When given “Berlin minus Germany plus Japan,” the program came back with the capital of Japan: “Tokyo.” This was exciting. But when the researchers put in “doctor minus man,” the computer returned “nurse.” And given “computer programmer minus man,” the program responded “homemaker.” The computer had clearly picked up some biases about what types of jobs are done by men and women.
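This “math with words” works because each word is stored as a list of numbers, called a vector. The Python sketch below uses tiny two-number vectors invented by hand so the arithmetic is easy to follow. It is not the researchers’ program. Real word vectors are learned from huge amounts of text and have hundreds of numbers each. The vector values and the function name are assumptions for illustration.

```python
import math

# Hand-made 2-number vectors, invented for this sketch.
# First number: which country the word belongs to. Second: 1.0 if it is a capital.
VECTORS = {
    "Germany": (1.0, 0.0), "Berlin": (1.0, 1.0),
    "Japan": (2.0, 0.0), "Tokyo": (2.0, 1.0),
    "France": (3.0, 0.0), "Paris": (3.0, 1.0),
}


def analogy(start, minus, plus):
    """Compute start - minus + plus, then return the closest other word."""
    target = tuple(
        s - m + p
        for s, m, p in zip(VECTORS[start], VECTORS[minus], VECTORS[plus])
    )
    # Skip the three input words so the answer is a new word.
    candidates = [word for word in VECTORS if word not in {start, minus, plus}]
    return min(candidates, key=lambda word: math.dist(VECTORS[word], target))


print(analogy("Berlin", "Germany", "Japan"))
```

Here, “Berlin minus Germany plus Japan” lands exactly on the vector for “Tokyo.” Because real vectors are learned from human writing, the same arithmetic can also surface patterns people would rather not see, such as the job stereotypes described above.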

Figuring out how to train AI to be unbiased may improve humanity as much as it improves AI. AI that learns from our websites, posts and articles will sound a lot like we do. In training AI to be unbiased, we first have to recognize our own biases. That may help us learn how to be more unbiased ourselves.

Maybe that’s the really important thing about the Turing test. By looking closely at AI to see if it seems like us, we see — for better or worse — ourselves.